A primer on voice assistants and the technology behind them

It was early in 2015 and I was at my desk, which happened to be positioned close to the front door of my company’s office in San Francisco’s SoMA district.

A courier had just left a stack of Amazon boxes on a nearby table and people in the office started milling around, grabbing up their respective deliveries. As one particular coworker reached for a box with his name on it he looked over at me and said, “Alexa, what’s the weather today?”

“Huh?” I replied, confused as to why someone would ask me what it was like outside when we were sitting a mere 15 feet from floor to ceiling windows.

He chuckled and tapped the tape across the Amazon box, the strip of adhesive advertising the ‘Echo,’ Amazon’s latest foray into the hardware space.

“Amazon’s new voice assistant…she’s called Alexa. Pretty funny, right?”

Oh yes. Hilarious.

I decided right then and there that I was never hopping on the voice train. 90% of the time ‘Siri’ didn’t know what I was talking about and now this new kid on the block had stolen my name, setting me up for endless ‘Alexa’ jokes that I would be forced to weakly smile at for all eternity.

No thank you, voice, no thank you.

Of course, the rest of the world wasn’t as salty about the introduction of Alexa as I was. The usability of voice has improved dramatically over the last four years and voice assistants are now the hottest new gadgets on the market.

According to Canalys, the worldwide installed base of smart speakers is set to grow 82.4% in 2019, surpassing 200 million units. To put that in perspective, 216.76 million iPhones were sold in 2017, and that was after 10 years on the market.

In addition, Grand View Research estimates that the global speech and voice recognition market will reach USD 31.82 billion by 2025, fueled by rising applications in the banking, healthcare, and automobile sectors.

Voice is happening, and in case you too have been holding out on embracing the act of conversing with an inanimate object, let me catch you up to speed.

In this piece, we’re going to cover what voice technology is, how it works, who uses it, who is developing it, where it’s being used, and what we’re using it for. We’ll also take a look at what makes some consumers wary of adopting voice, like privacy concerns and apprehension around how voice assistant usage may lead to a lapse in common courtesy.

Hello, Computer

Voice technology is a field of artificial intelligence (AI) focused on developing and perfecting verbal communication between humans and computers.

Though attempts at developing a verbally communicative relationship with machines have been taking place since the 1950s, it was only in the last 10 years that we got anywhere close to what Gene Roddenberry imagined when coming up with the voice-controlled computer utilized on the Starship Enterprise.

Talking to inanimate objects used to be a behavior that would cause those around you to be concerned for your mental health. These days, however, controlling and interacting with your computer (or smartphone, speaker, watch, car, or microwave) through speech is fairly mainstream. Consumers have, to some extent, even come to expect a voice option, and take its availability into consideration when researching a purchase.

For instance, a recent survey of smart speaker owners performed by J.D. Power revealed that “59% of U.S. consumers said they were more likely to purchase a new car from a brand that supports their favored smart speaker voice assistant.”

That’s right, having Alexa onboard is right up there with heated seats and leather upholstery.

Beyond being a fun accessory or add-on, today’s voice tech services have many practical applications. Not to reveal myself as too much of a Trekkie (or Trekker?), but another piece of Starfleet technology affiliated with voice that has debuted in the real world is the ‘universal translator’.

Products on the market today, such as Google’s Pixel Buds, can translate 40 different languages in real time, a feat that would’ve seemed impossible just a few years ago. Google announced last fall that the same functionality would now be available across all Google Assistant-enabled headphones and Android phones.

With voice turning from science fiction to just plain science, it does make one wonder exactly how the technology works.

Looking under the hood

First things first. Just like talking to another person, in order for each side to understand the other, you need to be speaking the same language (or each of you needs to understand the other’s language).

Automatic Speech Recognition (ASR) is what makes this possible. It’s the process of turning human speech into something a computer can understand. The operation is much more complex than you might expect, so to keep it very high level, it goes something like this:

- A person speaks into a microphone

- The sound is processed into a digital format

- The computer breaks the speech down into phones, small units of sound that roughly correspond to letters (a cousin of the phoneme)

- Then the computer uses methods of pattern recognition and statistical models to predict what was said (Source)
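To make those steps a little more concrete, here is a toy sketch in Python. It is not how any real assistant works: the mini-lexicon, the phone labels, and every probability below are made up for illustration, and the step of turning audio into phones is simply assumed to have happened. What it does show is the general idea of the last step, which is picking the word that is statistically most likely given the sounds that were heard.

```python
import math

# Step 2 (illustrative): "digitize" a sound by sampling a waveform at fixed intervals.
def digitize(duration_s=0.01, sample_rate=8000, freq_hz=440.0):
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

# Step 3 is assumed to be done already: the audio has been turned into phones.
# Hypothetical mini-lexicon mapping words to phone sequences.
PRONUNCIATIONS = {
    "weather": ["W", "EH", "DH", "ER"],
    "whether": ["W", "EH", "DH", "ER"],
    "feather": ["F", "EH", "DH", "ER"],
}
# Made-up estimates of how often each word is said to an assistant.
WORD_PRIOR = {"weather": 0.6, "whether": 0.3, "feather": 0.1}

def phone_match(heard, expected, p_correct=0.9):
    """Likelihood of hearing `heard` if the speaker actually said `expected`."""
    if len(heard) != len(expected):
        return 0.0
    prob = 1.0
    for h, e in zip(heard, expected):
        prob *= p_correct if h == e else (1 - p_correct)
    return prob

# Step 4: combine "how well do the sounds match" with "how common is the word".
def recognize(heard):
    scores = {
        word: phone_match(heard, phones) * WORD_PRIOR[word]
        for word, phones in PRONUNCIATIONS.items()
    }
    return max(scores, key=scores.get)

samples = digitize()
guess = recognize(["W", "EH", "DH", "ER"])
```

Notice that "weather" and "whether" sound identical here, so the sounds alone can't separate them. The word prior breaks the tie, which is a small taste of why context matters so much in what follows.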

This, of course, is just the most basic form of speech recognition, essentially providing the building blocks for activities like dictation or speaking your credit card number into an automated phone system. If you actually want to converse with a computer, the computer needs to not only hear the sounds you’re speaking but also understand them and their meaning. In other words, it needs context.

Natural Language Processing (NLP) and its subset, Natural Language Understanding (NLU), make up a subfield of Artificial Intelligence (AI) that focuses on helping humans and computers converse naturally, while also comprehending the true meaning and intent of the speech.

If we can anthropomorphize a bit, computers are quite literal. Their human counterparts, on the other hand, tend to use language more figuratively. For instance, when we tell a computer that we’re ‘killing it today,’ the computer likely thinks that someone or something is in mortal peril. (Source) Given the problems literal interpretation can cause, teaching computers to estimate the probability of a specific intent or context is imperative to a smooth voice experience.
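Here is a minimal sketch of what "estimating the probability of an intent" could look like. The two intents, the cue words, and all of the numbers are invented for this example; real systems learn these values from large amounts of data rather than from a hand-written table.

```python
# Hypothetical cue words with made-up probabilities P(word | intent).
CUES = {
    "praise": {"killing": 0.3, "it": 0.3, "today": 0.2, "crushing": 0.2},
    "danger": {"killing": 0.5, "help": 0.3, "someone": 0.2},
}
# Made-up prior: figurative praise is assumed far more common than real danger.
PRIOR = {"praise": 0.9, "danger": 0.1}
# Small probability assigned to words an intent has never been seen with.
UNSEEN = 0.01

def intent_probabilities(utterance):
    """Score each intent, then normalize the scores so they sum to 1."""
    words = utterance.lower().split()
    scores = {}
    for intent, cues in CUES.items():
        score = PRIOR[intent]
        for word in words:
            score *= cues.get(word, UNSEEN)
        scores[intent] = score
    total = sum(scores.values())
    return {intent: score / total for intent, score in scores.items()}

probs = intent_probabilities("killing it today")
likely_intent = max(probs, key=probs.get)
```

With these assumed numbers, the word "killing" on its own leans toward danger, but the surrounding words "it" and "today" pull the estimate firmly toward praise. That is the whole point: the computer weighs the phrase, not just the scariest word in it.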

Successful voice tech also hinges on the computer’s ability to learn and grow in its language abilities. This is accomplished through Machine Learning and its subset, Deep Learning, which use algorithms and statistics to analyze speech data to continuously improve computer performance of a specific task, which in this case is human conversation.

There’s a lot more to the puzzle of translating human speech for computers, such as tackling the issues of audio quality, individual speech patterns, and accents, the last two being particularly important when creating a technology that will be used by a wide array of speakers.

Who uses voice technology?

Everyone, really.

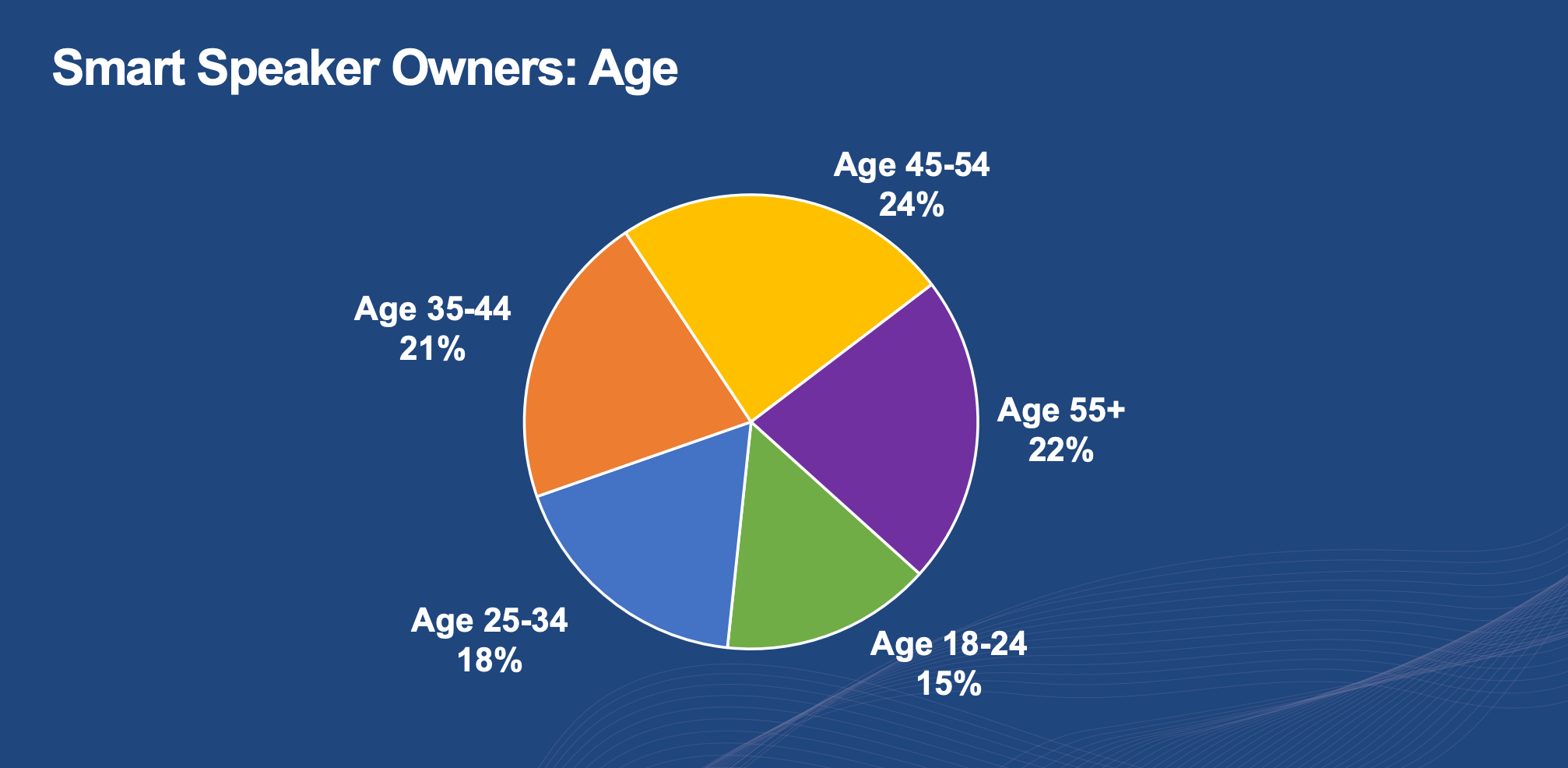

The Spring 2018 Smart Audio Report from NPR and Edison showed that smart speaker ownership is evenly distributed across all adult generations, with women being a little more likely to take the smart speaker plunge than their male counterparts.

When it comes to those younger than 18, they like voice too. This year eMarketer expects “1.5 million kids — those ages 11 and younger — to use a smart speaker like Amazon Echo or Google Home, at least once a month. By 2020, that figure will grow to 2.2 million.” Children rely on voice assistants to play audio, tell bedtime stories, and even help them brush their teeth. Parents also like voice as a choice for children as it provides an alternative to screen-time, a concern that has been a hot topic in recent years.

When it comes to teen usage, eMarketer estimates that 3 million teens will use a smart speaker at least once a month in 2019. Teens aren’t heavy users of smart speakers due to increases in independence during this stage of life. However, as avid smartphone users, teens are still likely to utilize voice tech on their mobile devices.

Who is developing voice technology?

Again, everyone.

All of technology’s biggest players are in the game, each with their own named, personified assistant.

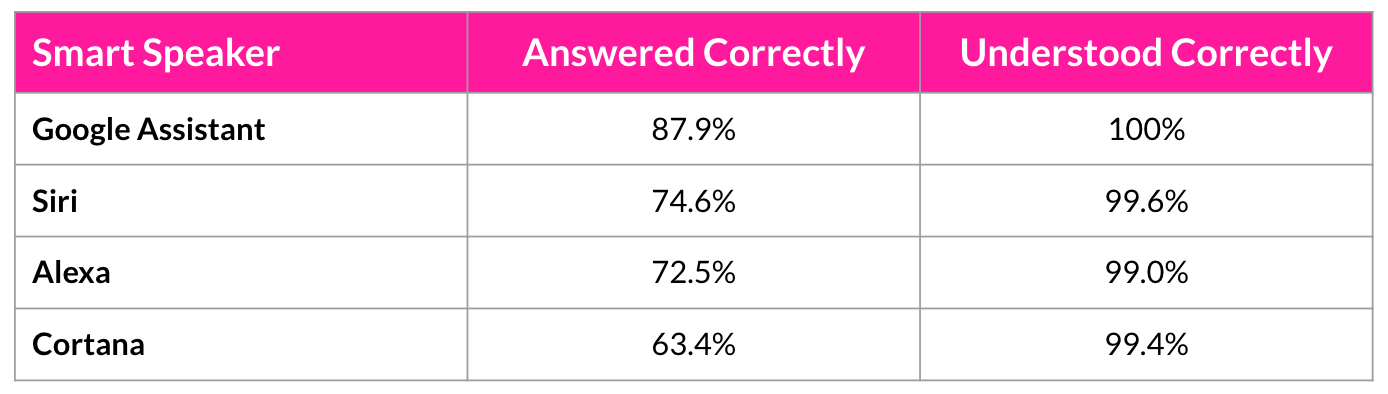

As far as which is the best, that is up for debate. Loup Ventures’ Annual Smart Speaker IQ Test compares the four main assistants (Google Assistant, Siri, Alexa, and Cortana) by asking them all the same 800 questions and seeing which performs the best.

Annual Smart Speaker IQ Test by Loup Ventures

In the most recent results, we learn that overall, Google Assistant is the strongest of the four assistants, but Siri is no slouch either.

You’d expect Alexa to be best at shopping, but the Amazon assistant answered only 52% of the commerce category questions correctly, compared to Google’s 86%. Cortana didn’t seem to be much competition at all, answering only 63.4% of the questions correctly, with its best showing being a third-place finish in the ‘information’ category.

Apart from accuracy, a survey of U.S. adults revealed that “How well [a voice assistant] understands me…” is the most important factor for users when forming voice assistant preferences. This means that in the end, you’re likely to adopt whatever technology understands you and provides the most seamless user experience.

Some named assistants coming out of Silicon Valley aren’t being marketed as end-user solutions themselves, but instead, are leveraged by other innovators to provide voice offerings that answer specific consumer needs. For instance, Audioburst relies on the power of IBM Watson’s NLU to understand the massive amounts of audio content we process each day.

Of course, if Watson’s acute understanding of human language isn’t exciting enough for you, he also won Jeopardy! in 2011. So there’s that.

Why do we want to use voice tech?

It’s personal. Given how close all of us are to our technology these days, it’s not surprising we’d want to personify it and have our communication take place in a more straightforward way. Speech is, after all, the most natural form of communication, and the predominant one throughout human history. Voice assistants have names, distinct programmed personalities, and are designed to make our lives easier.

That addition of ease in the navigation of daily tasks is likely the largest draw to voice technology.

Today’s society thrives on packing in as much into our days as humanly possible. We work, go to school, exercise, commute, cook, clean, socialize, shop, care for our loved ones, and maybe if we have enough time left over, we might also work in getting some sleep. In a world of constant multi-tasking and transitions from one context to the next, having our hands and eyes free can be a lifesaver.

The ability to utilize the benefits of technology through voice also aids accessibility. Lack of sight, dexterity, or literacy are no longer barriers to using and benefitting from computers and smart devices, leading to increases in independence and self-sufficiency.

Lastly, voice tech is just fun! Voice puts entertainment at the tip of our tongues, from trivia games to listening to streaming audio, to annoying our cats with smart speaker skills that are fluent in ‘meow’.

Where is voice technology currently used?

Geographically

As one would expect, with the main development players based locally, voice adoption is strong in the United States. According to the March 2019 Smart Speaker Consumer Adoption Report, the number of U.S. adults who own smart speakers rose 40.3% in 2018, climbing from 47.3 million to 66.4 million during the year. And that’s not even counting all of the voice commands being aimed at smartphones and in-car solutions, whose usage tends to increase with a person’s exposure to smart speakers.

While the numbers here in the States are impressive, the rest of the world is catching up. Australia, while technically coming in with a smaller number of adults (5.7 million) using smart speakers, has a “user base relative to the population [that] now exceeds the U.S.” (Source)

Meanwhile, China is expected to see a 166% growth in the installed base for smart speakers going from 22.5 million units in 2018 to 59.9 million in 2019. (Source)
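If you'd like to check growth figures like these yourself, the arithmetic is simple: subtract the starting value from the ending value, divide by the starting value, and multiply by 100. The short sketch below uses the rounded U.S. and China numbers quoted above; the function name is just an illustrative choice.

```python
def percent_growth(start, end):
    """Percentage change from `start` to `end`."""
    return (end - start) / start * 100

# U.S. smart speaker owners in 2018, in millions (rounded figures from the text).
us_growth = percent_growth(47.3, 66.4)

# China's installed base, 2018 to 2019, in millions (rounded figures from the text).
china_growth = percent_growth(22.5, 59.9)

print(f"U.S.: {us_growth:.1f}%  China: {china_growth:.0f}%")
```

The China figure works out to about 166%, matching the forecast. The U.S. figure comes out at roughly 40.4% from these rounded inputs, a hair off the reported 40.3%, which is presumably because the report worked from unrounded counts.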

Now that we know where in the world voice technology is being embraced, it’s time to talk about the everyday locations where voice is popping up.

In Daily Life

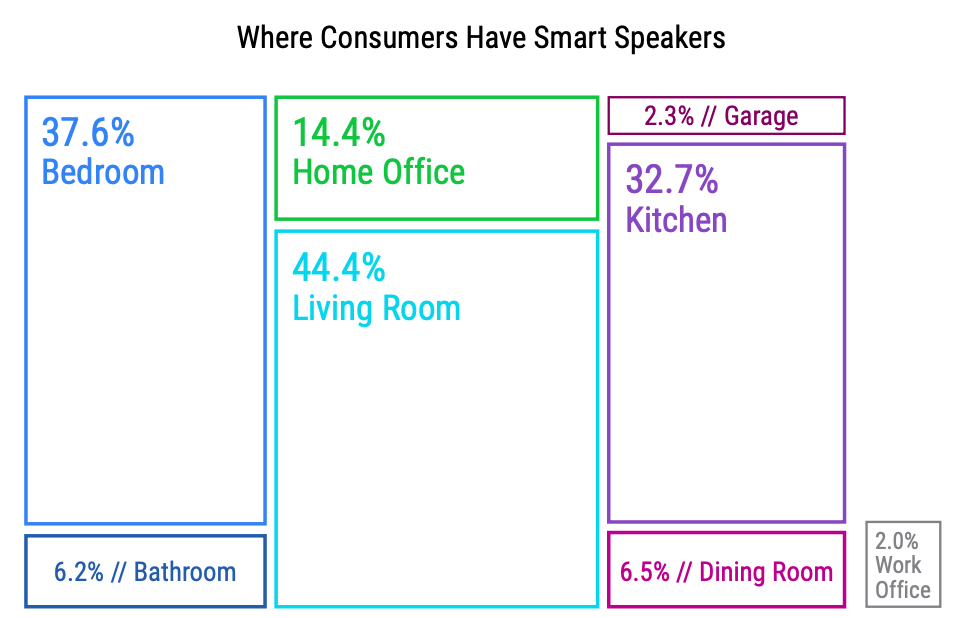

According to the Voice Assistant Consumer Adoption Report, users have fully embraced the use of voice in the home with “over 40% of smart speaker owners now having multiple devices, up from 34% in 2018.”

This suggests that more and more users are finding it useful to have a voice assistant available regardless of where they are in the home at any given time.

The report also sheds light on which rooms are most likely to include a smart speaker. Use in the living room is the most popular by far (44.4% of users), but the bedroom (37.6% of users) and kitchen (32.7% of users) are also proving to be useful spots for voice interaction.

Outside of the home, it’s no surprise that with hands and eyes occupied, the car is one of the most popular spots in which to interact with voice tech.

“Nearly twice as many U.S. adults have used voice assistants in the car (114 million) as through a smart speaker (57.8 million). The car also claims far more monthly active voice users at 77 million compared to 45.7 million.” (Source)

Probably the most omnipresent location for voice is our phones.

Google’s ‘Voice Search’ and Apple’s ‘Siri’ debuted on our phones around a decade ago and were the first glimpses into what a voice-controlled world might look like. Mobile voice tech accuracy and usefulness have grown significantly through time and as of 2019, 70.2% of adults in the United States have given voice a try via their phones (Source).

Voice is with us wherever we go, which might lead one to wonder: What exactly are we doing with it?

Voice tech applications

We mentioned Google’s Pixel Buds earlier in the piece; however, real-time translation is only one way that voice is applied outside of the standard voice assistant.

In an article for Machine Design, use cases were laid out for voice tech in the medical field. For example, during an exam, “having a listening device in the room with [a] patient has a lot of potential for capturing clinical notes, identifying billing codes, or even providing clinical decision support during the encounter,” freeing up the doctor to focus not on paperwork, but on the person whom they’re treating.

Another space where voice is getting interesting is Customer Service.

Most people can relate to the frustration of having a poor call with a customer service department, or even worse, their automated voice system.

However, with the advent of emotion recognition software, friction-filled support calls may become a thing of the past. Such software can “recognize customer emotion by considering parameters such as the number of pauses in the operator’s speech, the change in voice volume, and the total conversation time in order to do a real-time calculation [of] a Customer Satisfaction Index.” (Source)

There are many different ways emotion recognition technology could be implemented in a real-time support interaction.

For instance, knowing whether a customer is upset might allow a voice system to switch their approach with the customer, or perhaps transfer them to a human representative when the computer ‘senses’ that a call may be going south.
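As a rough illustration of that idea, here is a toy score built from the three parameters mentioned in the quote: pauses, change in voice volume, and total conversation time. The weights, the 0 to 100 scale, and the hand-off threshold are all invented for this sketch; a real Customer Satisfaction Index would be calibrated against actual call data.

```python
def satisfaction_index(pauses, volume_change_db, duration_min):
    """Toy score from 0 (unhappy) to 100 (happy). All weights are invented."""
    penalty = 4 * pauses + 3 * abs(volume_change_db) + 2 * duration_min
    return max(0, 100 - penalty)

def next_step(index, threshold=50):
    """Decide whether the call should be handed to a person (hypothetical rule)."""
    if index < threshold:
        return "transfer to human"
    return "continue with assistant"

calm_call = satisfaction_index(pauses=2, volume_change_db=1, duration_min=5)
tense_call = satisfaction_index(pauses=8, volume_change_db=6, duration_min=12)

print(calm_call, next_step(calm_call))
print(tense_call, next_step(tense_call))
```

A short, steady call keeps a high score and stays with the assistant, while a long call full of pauses and rising volume drops below the threshold and gets routed to a human.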

Customer Support solutions aren’t the only application of emotion recognition either.

For instance, we use it here at Audioburst to help determine the context and intent of new audio when it is imported into our system. Knowing whether a specific piece of content is happy, sad, angry, anxious, or even just neutral plays a vital role in providing personalized listening experiences, tailored to our users’ environment, interests, and personalities.

How consumers are using voice assistants

Use cases for voice assistants can essentially be broken down into two categories, search and commands.

- Search covers queries like asking a general question, checking the weather, news, or a sports team’s current standings. These queries require the voice assistant to understand the true intent and context of the question, and then externally seek and return the correct answer.

- Commands are much more straightforward, including tasks like setting an alarm, making a phone call, or streaming audio from a chosen app.
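The split between the two categories can be sketched with a deliberately naive router. It assumes that a request starting with one of a handful of action verbs is a command and that everything else is a search. The verb list is hypothetical, and real assistants rely on trained language models rather than a keyword check like this one.

```python
# Hypothetical list of verbs that signal a direct command.
COMMAND_VERBS = {"set", "call", "play", "turn", "stop"}

def route(utterance):
    """Label an utterance as a 'command' or a 'search' based on its first word."""
    words = utterance.lower().split()
    first_word = words[0] if words else ""
    return "command" if first_word in COMMAND_VERBS else "search"

print(route("Set an alarm for 7 am"))
print(route("What is the weather today"))
```

Even this crude version hints at why search is the harder category: a command maps onto a known action, while a search still has to be understood and then answered from somewhere else.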

The top three uses of smart speakers are asking general questions, streaming music, and checking the weather, with over 80% of smart speaker owners having tried these functionalities at least once. The above report notes one interesting piece of data from the use case results: smart home control is ninth in terms of whether or not a user has ‘ever tried’ the feature but fourth for daily active use.

Read: while not everyone has smart home devices such as lights or thermostats, those who do are likely to use their smart speaker to control them every day.

In the car, voice assistants are used to take care of tasks like making a phone call, texting, asking for directions, and queueing up audio entertainment selections. These tasks would otherwise require the driver to pull over or take their attention away from the act of driving.

With many municipalities enacting ‘hands-free’ laws for drivers, the functionality is not only convenient but imperative.

Beyond using smart speakers at home and embracing in-car assistants, voice technology can also be found scattered throughout daily life, taking orders at restaurants and even providing concierge services at hotels. New uses are emerging every day and it’ll be interesting to see where voice will install itself next.

Despite this rapid adoption, there is still some hesitancy

Privacy continues to be a serious issue for smart speaker use.

There are reports of personal conversations being unintentionally sent to random people in a user’s contact list, Alexa maintaining a record of every conversation you’ve ever had with the device (you can delete it), incidents of Alexa spontaneously laughing (not a privacy risk, but definitely creepy!), and the recent revelation that Amazon employees are actively listening to user conversations.

However, as alarming as these examples may sound, privacy concerns don’t seem to be stopping people from buying smart speakers.

Voicebot points out that though ⅔ of U.S. consumers have some amount of concern over privacy issues with smart speakers, it doesn’t necessarily appear to be a barrier to purchase and adoption.

“The privacy concerns for all consumers and those that do not own smart speakers are nearly identical. For example, only 27.7% of consumers without smart speakers said they were very concerned about privacy issues compared to 21.9% of device owners. This means that even some consumers with privacy concerns went ahead and purchased smart speakers.”

There are also concerns that barking orders at smart assistants all day may be making us forget our manners.

While the concern is for both adults and children, when it comes to kids, tech companies themselves are putting some behavior modification solutions into play in the form of ‘please and thank you’ apps and skills. There are even camps that are vehemently opposed to showing artificial intelligence the same courtesies we show fellow human beings.

Conclusion

The fine details of how we’ve taught computers to listen to, understand, and respond to our spoken word may be complex and overwhelming, but the usage itself is not. Despite concerns about privacy and etiquette, the benefits of easily accessed information and media, ordered in a hands-free, screen-independent way do seem to outweigh the negatives.

Voice is changing how we interact with the world.

The technology is improving at record speeds and all eyes are on the space. It’s exciting to think about how additional research and data, as well as advances in technology and understanding, will influence voice communication’s ability to grow and evolve.

As for me, I’ve been the subject of approximately 4,782 Alexa jokes since that initial jab in 2015. While I know they are intended to be funny, I still can’t suppress my instinct to sigh and roll my eyes, reminiscing about the good old days when no one had ever heard of the name Alexa.

That being said, as I sit here at my desk, preparing to go to bed, I’ve found myself lifting my phone to quietly ask Siri to set my alarm for 7 am and to turn off the lights.

I must’ve gotten on that voice train after all.